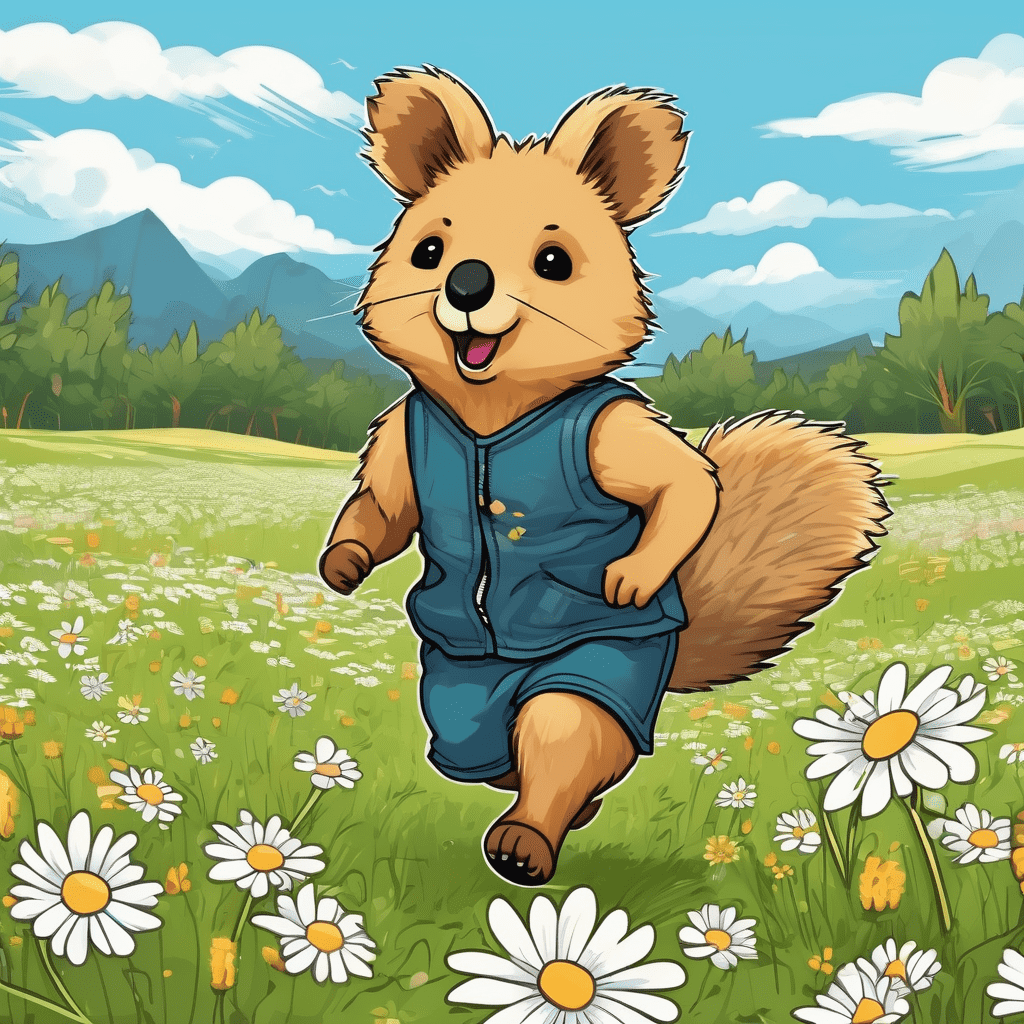

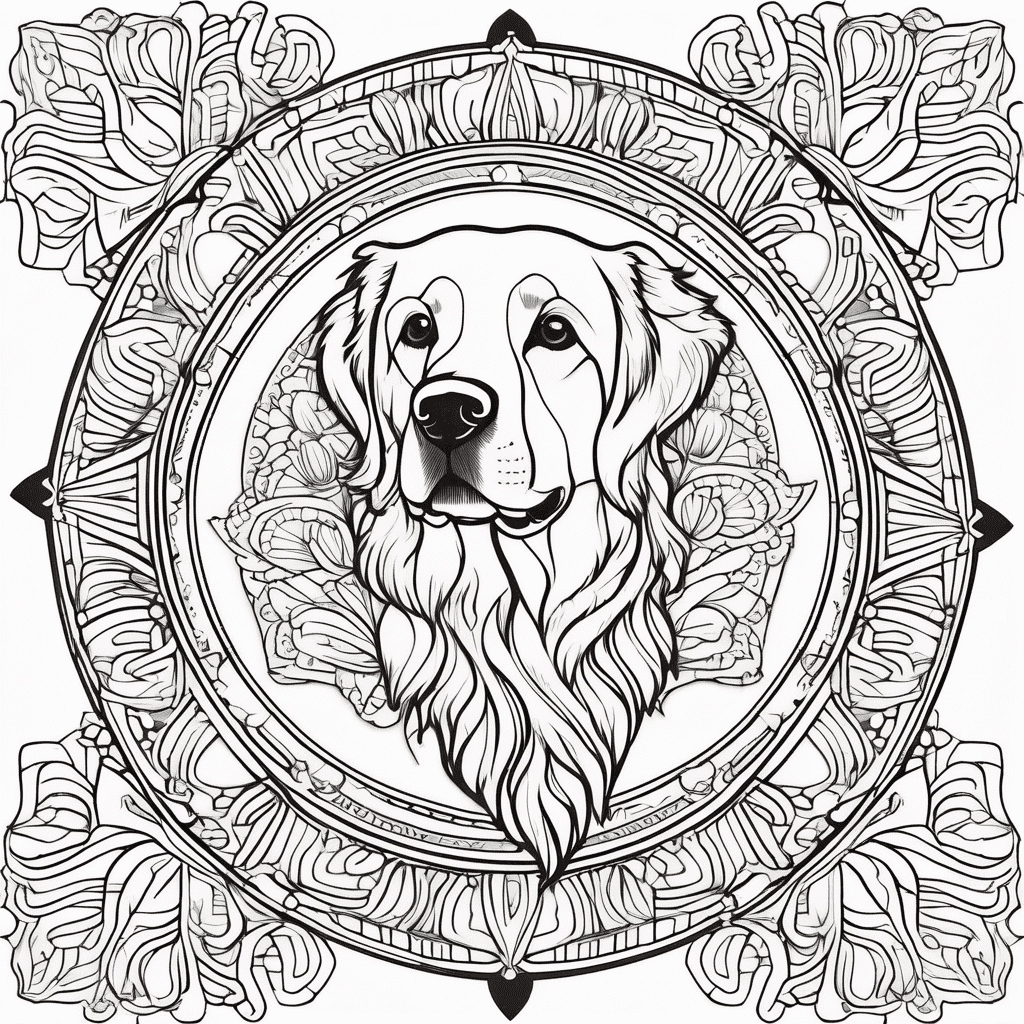

Text To Image with AI Art Generator

AI Art Made Simple with Stable Diffusion Online

Stable Diffusion Online makes AI art creation straightforward for beginners and experts alike. You don't need deep technical knowledge to explore Stable Diffusion: the interface is designed to turn ideas into compelling visuals quickly. More than an AI image generator, it bridges creativity and technology and keeps the creation process enjoyable and accessible. Whether you're trying digital art for the first time or are a seasoned artist, Stable Diffusion Online helps bring your ideas to life, making it a go-to choice for creating Stable Diffusion art online.

Enhance Artistry with Stable Diffusion AI Image Generator

The Stable Diffusion AI Image Generator is a powerful tool for artists and designers who want to produce complex, high-quality images. It suits anyone integrating AI into their creative process, offering fine detail and extensive customization. More than a text-to-image tool, it is a creative partner that renders detailed landscapes, intricate designs and conceptual art with precision. Whether for digital marketing or personal projects, it helps you create unique, impactful artwork, and its ability to handle intricate artistic visions makes it a valuable tool for anyone who wants to harness diffusion models in their art.

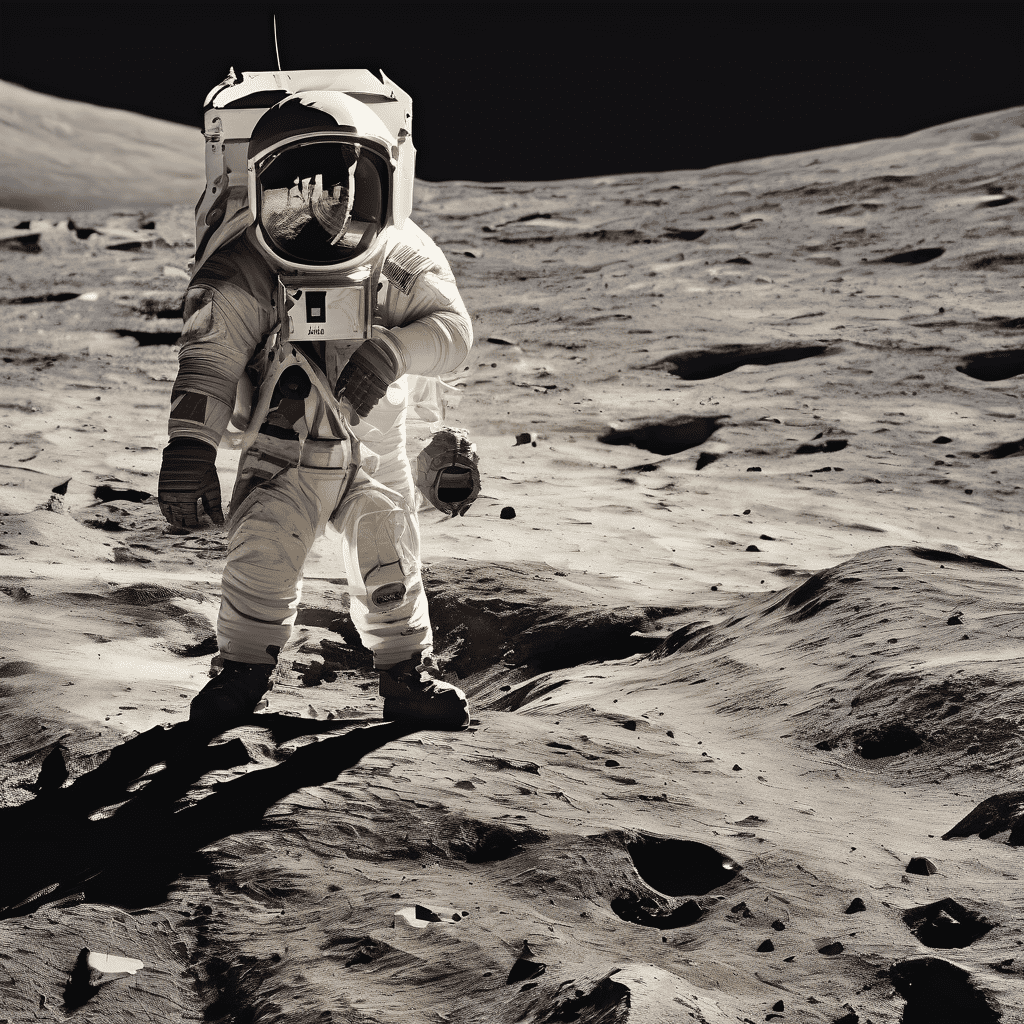

High-Resolution AI Art with Stable Diffusion XL Online

Stable Diffusion XL Online takes AI art creation further, focusing on high-resolution, detailed imagery. It is tailored to professional-grade projects and delivers high-quality results for digital art and design. Its high-resolution outputs make it well suited to large-scale artworks and detailed digital pieces. More than just a Stable Diffusion online tool, it is a gateway to exploring creative possibilities with AI, turning complex ideas into polished visuals for both commercial and artistic work. Explore high-resolution AI art creation with Stable Diffusion XL Online, where every detail is rendered with clarity and precision.

Stable Diffusion XL Model

Stable Diffusion XL 1.0 (SDXL) is a major version of the AI image generation system Stable Diffusion, created by Stability AI and released in July 2023. Compared with earlier versions, it pairs a 3.5-billion-parameter base model with a refiner model (about 6.6 billion parameters for the full two-model pipeline), generates at a native 1024x1024 resolution, produces more realistic images, renders legible text more reliably, works with shorter prompts, and offers built-in preset styles. Stable Diffusion XL represents a significant leap in image quality, flexibility and creative potential over prior Stable Diffusion versions.
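As a rough illustration of what "1024x1024" means inside the model, the sketch below computes the size of the latent that SDXL actually denoises (its VAE, like earlier versions', downsamples by a factor of 8 into 4 channels) and shows one way to load the base model with the Hugging Face `diffusers` library. The library choice, the `stabilityai/stable-diffusion-xl-base-1.0` model ID and the helper names are assumptions for illustration, not part of this site's service; the refiner stage is omitted.

```python
from __future__ import annotations


def latent_shape(height: int, width: int, channels: int = 4, factor: int = 8) -> tuple[int, int, int]:
    """Return the (channels, height, width) of the latent the VAE produces for an image."""
    if height % factor or width % factor:
        raise ValueError(f"height and width must be multiples of {factor}")
    return (channels, height // factor, width // factor)


def generate_sdxl(prompt: str, out_path: str = "sdxl.png") -> None:
    """Hypothetical helper: text-to-image with the SDXL base model via diffusers.

    Assumes `pip install diffusers transformers accelerate torch`, a CUDA GPU,
    and network access on first use to download the weights.
    """
    import torch
    from diffusers import StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    ).to("cuda")
    # SDXL's native resolution; the U-Net works on a 4x128x128 latent.
    image = pipe(prompt=prompt, height=1024, width=1024).images[0]
    image.save(out_path)
```

So a 1024x1024 SDXL image is denoised as a 4x128x128 latent, four times the area of the 4x64x64 latent behind a 512x512 image from earlier versions.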

What is Stable Diffusion AI?

Stable Diffusion is an open-source AI system for generating realistic images and editing existing ones. It uses a deep learning model trained on billions of image-text pairs. Given a text prompt, it creates images that match the description. You can use it through websites like Stablediffusionai.ai or run it locally. Stable Diffusion was a breakthrough in publicly available AI image generation. Despite limitations such as bias in its training data, it gives artists and creators an unprecedented level of creative freedom. Used responsibly, it has exciting potential for art, media and more.

How to use Stable Diffusion AI

Stable Diffusion is an AI image generator. To use it online, go to stablediffusionai.ai and type a text prompt describing the image you want to create. Adjust settings such as image size and style, then click "Dream" to generate images. Select your favorite image and download or share it. Refine your prompts and settings until you get the results you want. You can also edit images with the inpainting and outpainting features. Used thoughtfully, Stable Diffusion offers great creative freedom.
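The website workflow boils down to a prompt plus a few generation settings. If you run Stable Diffusion locally instead, the sketch below bundles those settings and passes them to the `diffusers` `StableDiffusionPipeline`. The `GenerationSettings` class and `generate` helper are hypothetical names, and the `CompVis/stable-diffusion-v1-4` model ID is an assumption; any compatible checkpoint should work.

```python
from dataclasses import asdict, dataclass


@dataclass
class GenerationSettings:
    """Common text-to-image settings; field names match StableDiffusionPipeline arguments."""
    prompt: str
    negative_prompt: str = ""
    width: int = 512
    height: int = 512
    num_inference_steps: int = 30
    guidance_scale: float = 7.5

    def validate(self) -> None:
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        # The VAE downsamples by 8, so image sides must be multiples of 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")


def generate(settings: GenerationSettings, seed: int, out_path: str) -> None:
    """Hypothetical helper: run one generation locally (needs diffusers, torch and a CUDA GPU)."""
    import torch
    from diffusers import StableDiffusionPipeline

    settings.validate()
    pipe = StableDiffusionPipeline.from_pretrained(
        "CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16
    ).to("cuda")
    # A fixed seed makes the result reproducible, so you can refine the prompt step by step.
    generator = torch.Generator("cuda").manual_seed(seed)
    image = pipe(**asdict(settings), generator=generator).images[0]
    image.save(out_path)
```

Keeping the seed fixed while changing one setting at a time is a simple way to see what each setting does.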

How to download Stable Diffusion AI

Stable Diffusion's original code is available on GitHub. To download it, go to github.com/CompVis/stable-diffusion, click the green "Code" button, select "Download ZIP", and extract the ZIP file. The repository contains the source code but not the model weights: download a checkpoint such as sd-v1-4.ckpt separately from Hugging Face (after accepting the model license) and place it where the README specifies. You'll need at least 10GB of free disk space and Python. The original README recommends a GPU with at least 10GB of VRAM; optimized forks and web UIs can run on cards with as little as 4GB. Alternatively, you can use Stable Diffusion through websites like Stablediffusionai.ai without installing anything locally. Stable Diffusion provides unprecedented access to a powerful AI image generator, so download it and unleash your creativity.
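A minimal sketch of the checkpoint download step, assuming the `huggingface_hub` package and the gated `CompVis/stable-diffusion-v-1-4-original` repository (accept its license on Hugging Face and run `huggingface-cli login` first). The `has_free_space` and `download_checkpoint` helpers are hypothetical; they check the 10GB rule of thumb before fetching the file.

```python
import shutil


def has_free_space(path: str, required_gb: float = 10.0) -> bool:
    """True if the filesystem holding `path` has at least `required_gb` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3


def download_checkpoint(dest_dir: str) -> str:
    """Hypothetical helper: fetch the SD v1.4 checkpoint and return its local path."""
    from huggingface_hub import hf_hub_download

    if not has_free_space(dest_dir):
        raise RuntimeError(f"less than 10 GiB free in {dest_dir}")
    # Gated repository: requires an accepted license and a logged-in Hugging Face account.
    return hf_hub_download(
        repo_id="CompVis/stable-diffusion-v-1-4-original",
        filename="sd-v1-4.ckpt",
        local_dir=dest_dir,
    )
```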

How to install Stable Diffusion AI

To install Stable Diffusion locally with the popular AUTOMATIC1111 web UI (stable-diffusion-webui), you need a PC with Windows 10 or 11, a GPU with at least 4GB of VRAM, and Python installed. Download the web UI repository and extract it. Get a pretrained model checkpoint (plus its .yaml config file, if it comes with one) and place it in the models/Stable-diffusion folder. Run the webui-user.bat file to launch the web UI; the first launch downloads the remaining dependencies. You can now generate images by typing text prompts and adjusting settings such as sampling steps and CFG scale. Install extensions such as ControlNet for more features. With the right setup, you can run this powerful AI image generator locally.
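Placing the checkpoint in the right folder is the step most often gotten wrong. This sketch copies a checkpoint, and a same-named `.yaml` config if one sits next to it, into `models/Stable-diffusion` under the web UI folder, which is where AUTOMATIC1111's web UI looks for models. The `install_checkpoint` helper is hypothetical; copying the files by hand does the same thing.

```python
import shutil
from pathlib import Path

CHECKPOINT_SUFFIXES = {".ckpt", ".safetensors"}


def install_checkpoint(checkpoint: str, webui_root: str) -> Path:
    """Copy a model checkpoint into the web UI's model folder and return its new path."""
    src = Path(checkpoint)
    if src.suffix not in CHECKPOINT_SUFFIXES:
        raise ValueError(f"unexpected checkpoint type: {src.suffix}")
    target_dir = Path(webui_root) / "models" / "Stable-diffusion"
    target_dir.mkdir(parents=True, exist_ok=True)
    dest = target_dir / src.name
    shutil.copy2(src, dest)
    # Some checkpoints ship with a matching .yaml config; copy it alongside if present.
    config = src.with_suffix(".yaml")
    if config.exists():
        shutil.copy2(config, target_dir / config.name)
    return dest
```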

How to train Stable Diffusion AI

To train your own Stable Diffusion model, you need a dataset of image-text pairs, a GPU with sufficient VRAM, and technical skills. First, prepare and clean your training data. Then modify the training config files to point to your dataset and set hyperparameters such as batch size and learning rate. Launch the training script; fine-tuning setups commonly update only the U-Net and keep the VAE and text encoder frozen. Training is computationally intensive, so rent a cloud GPU instance if needed. Monitor training progress, evaluate the model's performance afterwards, and keep fine-tuning until you are satisfied. With time, computing power and effort, you can customize Stable Diffusion for your specific needs.
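To make "training" concrete: Stable Diffusion's U-Net learns to predict the noise that forward diffusion added to a latent, using a mean-squared-error loss. The NumPy sketch below shows that objective with a toy linear denoiser standing in for the U-Net, a DDPM-style linear noise schedule (Stable Diffusion itself uses a slightly different "scaled linear" schedule), and plain gradient descent. All names and sizes are illustrative assumptions; real training works on VAE latents with text conditioning.

```python
import numpy as np


def linear_alphas_cumprod(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(x0, noise, t, alphas_cumprod):
    """Forward diffusion q(x_t | x_0): sqrt(a_t) * x0 + sqrt(1 - a_t) * noise."""
    # Reshape so one a_t per sample broadcasts over the remaining dimensions.
    a = np.asarray(alphas_cumprod[t]).reshape(-1, *([1] * (np.ndim(x0) - 1)))
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


def training_step(weights, x0, alphas_cumprod, rng, lr=0.5):
    """One gradient step on the noise-prediction MSE for a toy linear denoiser x_t @ weights.

    A real model is a U-Net that also receives the timestep and the text embedding.
    """
    t = rng.integers(0, len(alphas_cumprod), size=x0.shape[0])  # random timestep per sample
    noise = rng.standard_normal(x0.shape)
    x_t = add_noise(x0, noise, t, alphas_cumprod)
    err = x_t @ weights - noise
    loss = float(np.mean(err ** 2))
    grad = 2.0 * x_t.T @ err / err.size  # gradient of the mean squared error
    return weights - lr * grad, loss
```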

What is LoRA in Stable Diffusion?

LoRA, short for Low-Rank Adaptation, is a technique for fine-tuning Stable Diffusion models cheaply. A LoRA is a small file of low-rank weight updates, trained on a set of images, that specializes a base model in a particular character, style, concept or detail such as faces, hands or clothing. To use a LoRA in the AUTOMATIC1111 web UI, download it, place it in the models/Lora folder, and add <lora:filename:weight> to your prompt, along with any trigger words its creator recommends. LoRAs give you more control over details without retraining the whole model, and they let you customize Stable Diffusion for anime characters, portraits, fashion models and more. With the right LoRAs, you can push Stable Diffusion to new levels of detail and customization.
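Under the hood, LoRA (Low-Rank Adaptation) stores two small matrices per adapted layer instead of a full weight matrix. This NumPy sketch shows the low-rank update W + scale * (alpha / r) * B @ A; the function names, the shapes, and treating `scale` as the per-LoRA strength multiplier that UIs expose are illustrative assumptions rather than any particular tool's code.

```python
import numpy as np


def lora_delta(A: np.ndarray, B: np.ndarray, alpha: float) -> np.ndarray:
    """Low-rank update (alpha / r) * B @ A, where r is the LoRA rank.

    Shapes: A is (r, d_in), B is (d_out, r), so the update matches W's (d_out, d_in).
    """
    r = A.shape[0]
    return (alpha / r) * (B @ A)


def apply_lora(W: np.ndarray, A: np.ndarray, B: np.ndarray, alpha: float, scale: float = 1.0) -> np.ndarray:
    """Return the adapted weights; `scale` blends the LoRA effect in or out."""
    return W + scale * lora_delta(A, B, alpha)
```

For a 6x5 weight matrix and rank 2, the LoRA stores 2x5 + 6x2 = 22 numbers instead of 30; for the large matrices inside a real U-Net, the savings are far bigger. B is usually initialized to zeros, so an untrained LoRA leaves the model unchanged.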

What is a negative prompt in Stable Diffusion AI?

In Stable Diffusion, negative prompts allow you to specify things you don't want to see in a generated image. They are simply words or phrases that tell the model what to avoid. For example, adding "poorly drawn, ugly, extra fingers" as a negative prompt reduces the chance of those unwanted elements appearing. Negative prompts provide more control over the image generation process. They are useful for excluding common flaws like distortions or artifacts. Specifying negative prompts along with positive prompts helps steer the model towards your desired output. Effective use of negative prompts improves the quality and accuracy of images produced by Stable Diffusion.
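Negative prompts work through classifier-free guidance. At each denoising step the model predicts the noise twice, once for your prompt and once for the negative prompt (an empty prompt if you give none), and the final prediction is pushed away from the negative one. The NumPy function below is a minimal sketch of that combination step with a hypothetical name; in `diffusers` you would simply pass `negative_prompt=` to the pipeline.

```python
import numpy as np


def guided_noise(eps_positive: np.ndarray, eps_negative: np.ndarray, guidance_scale: float = 7.5) -> np.ndarray:
    """Classifier-free guidance with a negative prompt.

    Start from the negative-prompt prediction and move toward the positive-prompt
    prediction, amplified by guidance_scale (the "CFG scale" setting).
    """
    return eps_negative + guidance_scale * (eps_positive - eps_negative)
```

With a scale of 1 the result is just the positive prediction; larger scales push the image harder toward the prompt and away from the negative prompt.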

Does Stable Diffusion AI need internet?

Stable Diffusion can run fully offline once installed locally. Internet access is needed only to download the source code, dependencies and model files in the first place. After setup, you can generate images through the local web UI without a connection, because inference runs entirely on your own GPU. This makes local use more private and secure than cloud-based services. Accessing Stable Diffusion through websites, by contrast, requires a constant internet connection. Running it locally avoids this, so you can use it on flights, in remote areas, or wherever internet access is limited. In short, web access needs internet, but self-hosted Stable Diffusion does not.
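If you self-host with Hugging Face `diffusers`, you can make the offline behavior explicit. This sketch sets the `HF_HUB_OFFLINE` environment variable and loads a pipeline from a local folder with `local_files_only=True`; the helper names and the `model_dir` argument are hypothetical. The variable is read when `huggingface_hub` is first imported, so it must be set before `diffusers` is imported.

```python
import os


def enable_offline_mode() -> None:
    """Tell huggingface_hub (and libraries built on it) not to make network calls."""
    os.environ["HF_HUB_OFFLINE"] = "1"


def load_local_pipeline(model_dir: str):
    """Hypothetical helper: load a previously downloaded model without touching the network."""
    enable_offline_mode()
    from diffusers import StableDiffusionPipeline  # imported after the variable is set

    return StableDiffusionPipeline.from_pretrained(model_dir, local_files_only=True)
```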

How to use embeddings in Stable Diffusion AI

Embeddings (also called textual inversions) teach a Stable Diffusion model a new keyword that stands for a specific visual style, object or concept. You can download ready-made embeddings or train your own on a small set of images representing the desired style. In the AUTOMATIC1111 web UI, place the embedding file in the embeddings folder and type the embedding's file name (without the extension) in your prompt to activate it. Stable Diffusion will then generate images in that style. To strengthen or weaken the effect, use the attention syntax, for example (name:1.2). Embeddings are a lightweight way to get consistent outputs and to tailor Stable Diffusion to particular artwork, aesthetics and other visual styles.
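Textual-inversion embeddings add a new pseudo-word to the text encoder's vocabulary and learn only that word's vector, leaving the rest of the model frozen. The toy sketch below, with a whitespace tokenizer and a small NumPy table standing in for the real CLIP tokenizer and embedding table, shows how a new token such as `<my-style>` gets its own row. All names are hypothetical; with `diffusers`, `pipe.load_textual_inversion(path, token="<my-style>")` loads a trained embedding into a pipeline.

```python
from __future__ import annotations

import numpy as np


def add_learned_token(vocab: dict[str, int], table: np.ndarray, token: str, vector: np.ndarray):
    """Register a new pseudo-word and append its (learned) vector to the embedding table.

    Returns new copies; textual inversion would train only `vector`.
    """
    if token in vocab:
        raise ValueError(f"token {token!r} already exists")
    if vector.shape != (table.shape[1],):
        raise ValueError("vector must match the embedding width")
    new_vocab = {**vocab, token: table.shape[0]}  # the new row index
    new_table = np.vstack([table, vector])
    return new_vocab, new_table


def embed_prompt(prompt: str, vocab: dict[str, int], table: np.ndarray) -> np.ndarray:
    """Look up one embedding row per whitespace-separated word (toy tokenizer)."""
    ids = [vocab[word] for word in prompt.split()]
    return table[ids]
```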

Frequently Asked Questions

What are 'Stable difusion' and 'Stable difussion'?

'Stable difusion' and 'Stable difussion' are typographical errors of 'Stable Diffusion.' There are no separate platforms with these names. 'Stable Diffusion' is the correct term for the AI art generation tool known for transforming text into images. These misspellings are common but refer to the same technology.

How does Stability Diffusion XL relate to Stable Diffusion?

"Stability Diffusion XL" usually refers to Stable Diffusion XL (SDXL); the name mixes up the model with its developer, Stability AI. SDXL is an advanced version of Stable Diffusion that generates at a higher native resolution (1024x1024) with greater detail and clarity, which makes it well suited to high-quality, professional projects.

Introduction to Stable Diffusion

Stable Diffusion is an open-source text-to-image model based on diffusion models, developed by the CompVis group at Ludwig Maximilian University of Munich and Runway ML, with compute support from Stability AI. It generates high-quality images from text descriptions and can also perform inpainting, outpainting and text-guided image-to-image translation. Its code and pretrained model weights are publicly released, and it can run on a single consumer GPU. Unlike earlier proprietary text-to-image models such as DALL-E and Midjourney, which were available only as cloud services, it can run locally on users' own devices.

How Stable Diffusion Works

Stable Diffusion uses a diffusion model architecture called a Latent Diffusion Model (LDM). It consists of three components: a variational autoencoder (VAE), a U-Net and an optional text encoder. The VAE encoder compresses the image from pixel space into a smaller latent space that captures its more fundamental semantic information. During forward diffusion, Gaussian noise is iteratively added to this compressed latent. The U-Net, built on a ResNet backbone, reverses the process, iteratively removing noise to recover a clean latent representation. Finally, the VAE decoder converts that representation back to pixel space to produce the image. The text description guides generation through cross-attention layers in the denoising U-Net.
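Cross-attention is where the text steers the image. In the sketch below, queries come from the flattened image latent while keys and values come from the text-encoder tokens, so each latent position gathers information from the prompt tokens most relevant to it. It is a single-head NumPy illustration with random toy weights and hypothetical names; in Stable Diffusion 1.x a 512x512 image becomes a 4x64x64 latent and the prompt becomes 77 token embeddings, and the real layers are multi-head and learned.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(latent_tokens, text_tokens, Wq, Wk, Wv):
    """Single-head cross-attention.

    latent_tokens: (num_positions, d_latent), one row per latent pixel.
    text_tokens:   (num_text_tokens, d_text), output of the text encoder.
    Returns the attended values (num_positions, d_v) and the attention weights.
    """
    q = latent_tokens @ Wq  # queries from the image
    k = text_tokens @ Wk    # keys from the text
    v = text_tokens @ Wv    # values from the text
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1 over the text tokens
    return weights @ v, weights
```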

Training Data for Stable Diffusion

Stable Diffusion was trained on the LAION-5B dataset, which contains image-text pairs scraped from Common Crawl. The data was classified by language and filtered into subsets with higher resolution, lower chances of watermarks and higher predicted "aesthetic" scores. The last few rounds of training also dropped 10% of text conditioning to improve Classifier-Free Diffusion Guidance.
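Dropping 10% of the text conditioning means that for roughly one training example in ten the caption is replaced by an empty one, so the same model also learns an unconditional prediction, which classifier-free guidance needs at sampling time. A minimal sketch of that caption dropout, using a hypothetical `drop_captions` helper:

```python
import random


def drop_captions(captions, drop_prob=0.1, rng=None):
    """Replace each caption with the empty string with probability drop_prob.

    The emptied examples teach the model an unconditional noise prediction,
    which classifier-free guidance combines with the conditional one.
    """
    rng = rng or random.Random()
    return ["" if rng.random() < drop_prob else caption for caption in captions]
```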

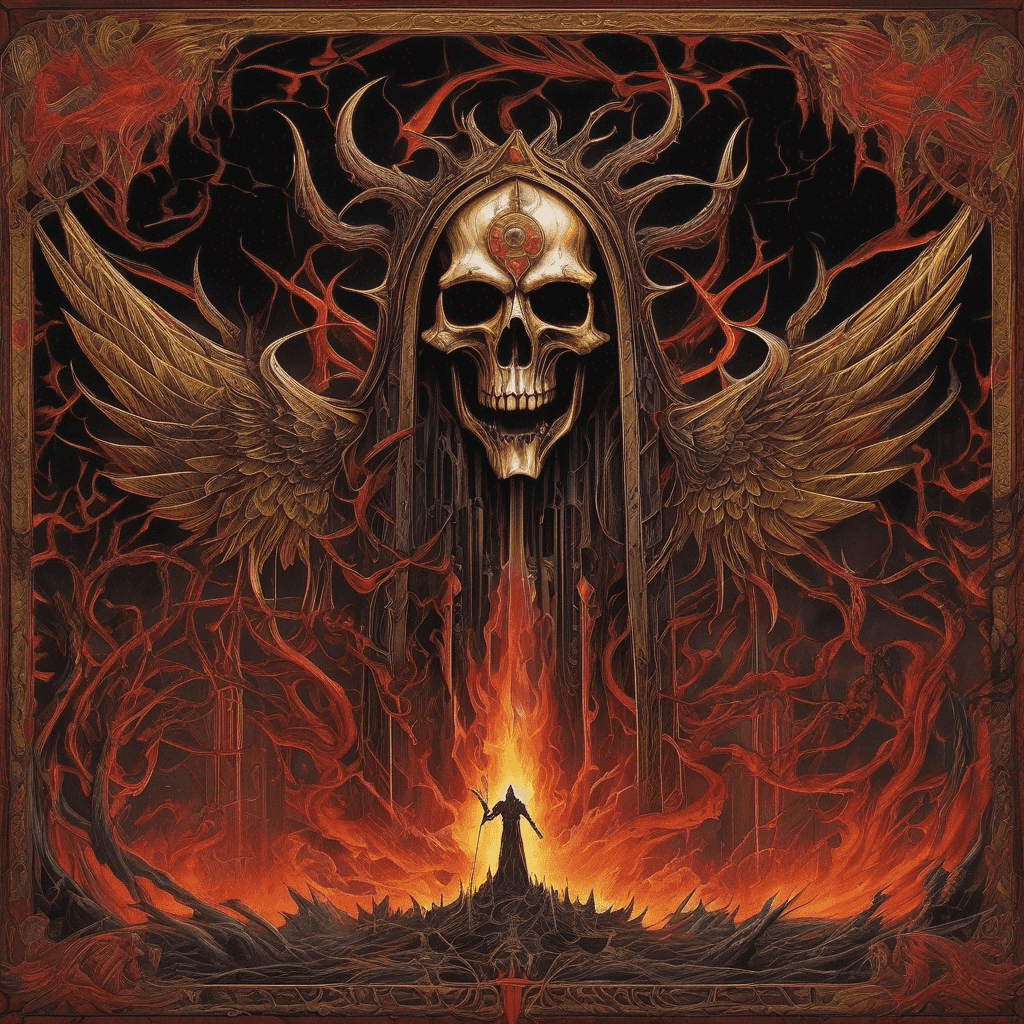

Capabilities of Stable Diffusion

Stable Diffusion can generate new images from scratch based on text prompts, redraw existing images to incorporate new elements described in text, and modify existing images via inpainting and outpainting. It also supports using "ControlNet" to change image style and color while preserving geometric structure. Face swapping is also possible. All these provide great creative freedom to users.
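When redrawing an existing image (image-to-image), a `strength` setting controls how much of the original survives: the input is noised part of the way, and only that fraction of the denoising schedule is run. The helper below reproduces that arithmetic as we understand the `diffusers` image-to-image pipeline to apply it, and `redraw` sketches a call to `StableDiffusionImg2ImgPipeline`. The model ID, file paths and helper names are assumptions.

```python
def img2img_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given image-to-image strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return min(int(num_inference_steps * strength), num_inference_steps)


def redraw(init_image_path: str, prompt: str, strength: float = 0.75, out_path: str = "redrawn.png") -> None:
    """Hypothetical helper: redraw an image to match a prompt (needs diffusers, torch, Pillow, CUDA)."""
    import torch
    from diffusers import StableDiffusionImg2ImgPipeline
    from PIL import Image

    pipe = StableDiffusionImg2ImgPipeline.from_pretrained(
        "CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16
    ).to("cuda")
    init = Image.open(init_image_path).convert("RGB").resize((512, 512))
    image = pipe(prompt=prompt, image=init, strength=strength, num_inference_steps=50).images[0]
    image.save(out_path)
```

Low strength keeps the composition and only restyles details; a strength of 1.0 effectively ignores the input image.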

Accessing Stable Diffusion

Users can download the source code and set up Stable Diffusion locally, or use Stability AI's official web app, DreamStudio, which offers a simple, intuitive interface and various settings tools; Stability AI also offers an API. Community hubs such as Hugging Face and Civitai host many Stable Diffusion models fine-tuned for different image styles.

Limitations of Stable Diffusion

A major limitation of Stable Diffusion is the bias in its training data, which is predominantly from English webpages. This leads to results biased towards Western culture. It also struggles with generating human limbs and faces. Some users also reported Stable Diffusion 2 performs worse than Stable Diffusion 1 series in depicting celebrities and artistic styles. However, users can expand model capabilities via fine-tuning. In summary, Stable Diffusion is a powerful and ever-improving open-source deep learning text-to-image model that provides great creative freedom to users. But we should also be mindful of potential biases from the training data and take responsibility for the content generated when using it.
